Shortcomings of today’s human-computer interaction

I have spent a lot of time in front of a computer. That’s because you can do a lot of amazing stuff with it. I don’t think I enjoy the process that much, though. Sometimes there is so much clicking and pointing needed to do simple stuff. I don’t think I would code without using VIM, or at least without adding VIM bindings to whatever text editor I’m using. I just can’t use a mouse when I code. Coding is discrete. The smallest unit of code is a letter. If you use the mouse to move the cursor in your text editor, you actually move over space that doesn’t interest you, such as the letters themselves. What you are actually after are the spaces between letters. Using only the keyboard puts you in exactly that discrete space: with the keyboard you can move exactly one letter at a time by adding, removing or moving over a letter. VIM bindings expand this by giving you motions, the simplest being moving over words. When you code, your tool is the keyboard. We all know what a keyboard looks like and what it should do… or do we?

So that’s the keyboard I’m using. It’s a custom keyboard made by OLKB. It’s ortholinear, meaning the keys sit in a straight grid with no stagger between consecutive rows. It also uses layers to compensate for the smaller number of keys: you hold one button and all the other buttons change their role (you can reprogram what each key does). One of the main ideas behind this keyboard is that it’s small, which minimizes hand movement and makes typing more efficient. Is this THE way to go for keyboards? Of course not. It’s a specialized tool. Even though I use it for everything, it’s not ideal for everything. It also wouldn’t work for every coder. There are other options.
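The layer idea boils down to a lookup: each layer is its own keymap, and holding a layer key makes that map take priority, falling through to the layer underneath for keys it leaves undefined. Below is a minimal Python sketch of that lookup. It only illustrates the concept; keyboard firmware (QMK, for example) implements this in C on the microcontroller, and the key positions, layer names and the `TRANSPARENT` marker here are hypothetical.

```python
# Hypothetical marker for "not defined on this layer, use the layer below".
TRANSPARENT = None

# Each layer maps a physical key position (row, column) to what the key sends.
LAYERS = {
    "base": {(0, 0): "q", (0, 1): "w", (3, 4): "LOWER"},
    "lower": {(0, 0): "1", (0, 1): "2", (3, 4): TRANSPARENT},
}


def resolve_key(position, held_layers):
    """Return what a key sends, checking the most recently held layer first.

    `held_layers` lists layer names in the order their layer keys were
    pressed; the base layer is always checked last.
    """
    for layer in reversed(["base", *held_layers]):
        symbol = LAYERS[layer].get(position, TRANSPARENT)
        if symbol is not TRANSPARENT:
            return symbol
    return None  # the key does nothing on any active layer


print(resolve_key((0, 0), []))         # q
print(resolve_key((0, 0), ["lower"]))  # 1
print(resolve_key((3, 4), ["lower"]))  # LOWER, inherited from the base layer
```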

I have always thought the ErgoDox looks interesting, with its two halves and separate sections for the thumbs.

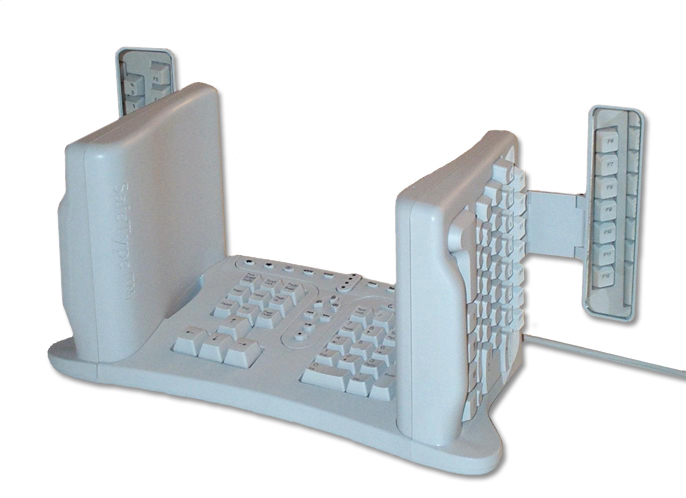

There is this monstrosity… It has mirrors. Driving backwards shouldn’t be a problem.

Then there is the TAP keyboard, which lets you type on any surface just by tapping your fingers. It uses accelerometers to analyse your finger movements.

People are looking for improvements in typing. That’s great. We always strive for new ways to express what’s on our minds. But typing isn’t the only thing we do with computers. One field that is close to me is 3D graphic design. If you have ever used software like 3DsMax, Maya, Modo, Blender, ZBrush or even Autodesk’s Inventor, you know how heavy on keyboard shortcuts those programs are. Even navigating 3D space requires holding a combination of keys while moving the mouse. Those programs are big and complicated, and a lot of their functionality isn’t available through keyboard shortcuts. Sometimes you can bind your own shortcut to a function; sometimes it’s not worth it because the function is something you use only sporadically; and sometimes adding a hotkey wouldn’t really solve the workflow issue you are facing.

Before I go further, I will take a moment to explain what inspired me to write this post. In April 2014 Per Abrahamsen created a thread on the Polycount forum. You can find it here. In this thread he showed a way to control 3DsMax’s material parameters with an Akai LPD 8 MIDI controller. I was kind of blown away, and so were others. Instead of going through the UI, which requires a lot of clicking, you just turn knobs to change the parameters of the material. That’s an example of something that can’t be improved upon with a simple hotkey binding. Also, the values of a material are analog in nature (I know that nothing is analog in a computer…). Even when they are in a 0-255 or 0-65535 range, you usually don’t set specific values. You just move the sliders until the material looks good. That’s why a knob as a physical interface works so well in this scenario. You select an object and you turn the knob. That’s it. You don’t have to navigate to the window or tab where the material is located. You don’t have to find the parameter you are looking for. You don’t have to use a mouse for that. You use a dedicated, specialised device. It’s obvious you can’t make hardware for every function of a program, but that’s not what I’m advocating for. There is room for tools that solve recurring problems in a specific field. Well… duuuuh. Of course there is. It’s just that I feel some fields are behind, and I feel the computer graphics field is one of them.
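For context, a knob on a MIDI controller like the LPD8 typically sends a 3-byte Control Change message: a status byte (0xB0 plus the channel number), a controller number and a 7-bit value from 0 to 127. The sketch below decodes such a message and maps the value onto a material parameter range. It is not Per Abrahamsen’s implementation; the `KNOB_BINDINGS` table, the parameter names and the function names are hypothetical, and it assumes the raw message bytes have already been read from the device by some MIDI library.

```python
def parse_control_change(message: bytes):
    """Decode a 3-byte MIDI Control Change message.

    Returns (channel, controller, value) or None if the message is not a CC.
    The channel is 0-15; controller and value are 7-bit numbers (0-127).
    """
    if len(message) != 3:
        return None
    status, controller, value = message
    if (status & 0xF0) != 0xB0:  # upper nibble 0xB marks Control Change
        return None
    return status & 0x0F, controller & 0x7F, value & 0x7F


def knob_to_parameter(value: int, low: float, high: float) -> float:
    """Linearly map a 7-bit knob value (0-127) onto [low, high]."""
    return low + (high - low) * value / 127


# Hypothetical table: which controller number drives which material parameter.
KNOB_BINDINGS = {
    1: ("glossiness", 0.0, 1.0),
    2: ("diffuse_red", 0, 255),
}


def handle_midi(message: bytes, material: dict) -> None:
    """Apply one incoming MIDI message to a material stored as a dict."""
    decoded = parse_control_change(message)
    if decoded is None:
        return
    _channel, controller, value = decoded
    if controller in KNOB_BINDINGS:
        name, low, high = KNOB_BINDINGS[controller]
        material[name] = knob_to_parameter(value, low, high)


material = {}
handle_midi(bytes([0xB0, 1, 127]), material)  # knob 1 turned fully clockwise
handle_midi(bytes([0xB0, 2, 0]), material)    # knob 2 turned fully counter-clockwise
print(material)  # {'glossiness': 1.0, 'diffuse_red': 0.0}
```

Since a knob only has 128 positions, a 0-255 parameter moves in steps of roughly 2. For the “turn it until it looks good” workflow described above, that is usually fine.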

Inspired by what Per Abrahamsen had shown, I tried to implement something similar myself. Below you can see how it went. I’m sorry for the quality of the recording. In short, I connected a potentiometer to an ATmega microcontroller and sent its position to the PC over UART. A Python script received the data and passed it through a socket to Modo. Another Python script running inside Modo used the data to control the opacity of the object.
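The PC side of that pipeline can be sketched roughly as follows. This is a reconstruction under assumptions, not the original script: it assumes the microcontroller prints one raw 10-bit ADC reading per line as decimal text, and it forwards each reading, normalized to 0.0-1.0, as a UDP datagram. The message format, port number and function names are made up for illustration, and the Modo-side receiver is left out because it depends on Modo’s own Python API.

```python
import socket

ADC_MAX = 1023  # assumption: the microcontroller sends raw 10-bit ADC readings


def parse_reading(line: bytes):
    """Turn one line such as b'512\\n' into a value in 0.0-1.0, or None if unusable."""
    try:
        raw = int(line.strip())
    except ValueError:
        return None  # partial or corrupted line, e.g. right after the port opens
    if not 0 <= raw <= ADC_MAX:
        return None
    return raw / ADC_MAX


def forward_readings(lines, sock: socket.socket, address) -> int:
    """Send each valid reading as a small UDP datagram; return how many were sent."""
    sent = 0
    for line in lines:
        value = parse_reading(line)
        if value is not None:
            sock.sendto(f"{value:.4f}".encode("ascii"), address)
            sent += 1
    return sent


# With pyserial installed, the lines could come from the real port, for example:
#   port = serial.Serial("COM3", 9600)  # port name and baud rate are placeholders
#   tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
#   forward_readings(iter(port.readline, b""), tx, ("127.0.0.1", 9999))
```

Dropping malformed lines instead of raising keeps the bridge running when the serial stream starts mid-message.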

It was clunky, and I abandoned further experimentation. Until last weekend. I learned a lot in those 3 years. I was able to solder a prototype (this time on an Arduino rather than a raw ATmega chip), program it and write a Python script to receive the data in Blender, all in around 2 hours. The result is much nicer than what I did with Modo 3 years earlier.
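A minimal sketch of the color mapping such a prototype needs, assuming each potentiometer reading has already been normalized to 0.0-1.0 on the PC side: three readings become a color, interpreted either directly as RGB or as HSV via Python’s standard `colorsys` module. The function names and mode strings are hypothetical, not taken from the original script. Note that the output depends only on where the knobs currently sit, which is why absolute potentiometers overwrite whatever color a newly selected mesh had.

```python
import colorsys


def pots_to_rgb(pots, mode: str):
    """Turn three potentiometer readings (normalized to 0.0-1.0) into an RGB triple.

    In "RGB" mode the knobs are red, green and blue directly; in "HSV" mode
    they are hue, saturation and value, converted with the standard library.
    """
    a, b, c = (min(max(p, 0.0), 1.0) for p in pots)  # clamp slightly noisy readings
    if mode == "RGB":
        return (a, b, c)
    if mode == "HSV":
        return colorsys.hsv_to_rgb(a, b, c)
    raise ValueError(f"unknown mode: {mode!r}")


def toggle_mode(mode: str) -> str:
    """Switch between the two modes, e.g. when a push button is pressed."""
    return "HSV" if mode == "RGB" else "RGB"


mode = "HSV"
print(pots_to_rgb((0.0, 1.0, 1.0), mode))  # (1.0, 0.0, 0.0): fully saturated red
mode = toggle_mode(mode)
print(pots_to_rgb((0.0, 1.0, 1.0), mode))  # (0.0, 1.0, 1.0): same knobs, now cyan
```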

This time I went for controlling 3 parameters of a material: either its RGB or its HSV values. As you can see in the clip above, you can toggle between the two. It’s just a really simple prototype and it has one major flaw: potentiometers are absolute, not relative. That means that when I set a color for a particular mesh, move to another mesh and try changing its color, I will start from the color I applied to the previous one, because the potentiometers are still sitting in the absolute positions corresponding to that color. To solve this issue, the potentiometers would have to be replaced with one of the following:

- encoders - these look like potentiometers but can rotate indefinitely. They have discrete steps when rotating, and each step could increase or decrease the value by 1 (that makes them relative, as opposed to absolute potentiometers). That would mean a lot of turning… so maybe by 5 per step then. But that would cut the number of values you can reach by a factor of 5. Not an ideal solution.

- motorized potentiometers - a potentiometer with a motor, so when you switch to a different object the potentiometers can align themselves with the values stored in that mesh’s material. That’s an expensive solution.

- motorized sliders - basically the same as motorized potentiometers but in a slider form factor.

I might explore motorized potentiometers one day. They are not only expensive but also big, and I don’t like the idea of having a giant console just for controlling a few specific parameters.
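The encoder option from the list above amounts to storing the value per mesh and letting the device report only how far it was turned. A minimal sketch, assuming each parameter lives in a 0-255 range and that the firmware already converts encoder pulses into a signed count of steps; `apply_encoder_steps` and the mesh names are hypothetical.

```python
def apply_encoder_steps(value: int, steps: int, step_size: int = 1,
                        low: int = 0, high: int = 255) -> int:
    """Nudge a stored parameter by a number of encoder steps.

    `steps` is positive for clockwise turns and negative for counter-clockwise
    ones. The result is clamped so it never leaves [low, high].
    """
    return max(low, min(high, value + steps * step_size))


# Each mesh keeps its own value; the encoder only says how far it moved, so
# selecting another mesh never makes the value jump to the knob's position.
red_channel = {"Cube": 200, "Sphere": 10}
red_channel["Sphere"] = apply_encoder_steps(red_channel["Sphere"], +3)
red_channel["Cube"] = apply_encoder_steps(red_channel["Cube"], +20, step_size=5)
print(red_channel)  # {'Cube': 255, 'Sphere': 13}, the Cube value is clamped at 255
```

With `step_size=1` a full sweep from 0 to 255 takes 255 steps; with `step_size=5` it takes 51, but starting from 0 only multiples of 5 can be reached, which is the resolution trade-off described above.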

One thing that makes a device like this exciting for me is binding a concept to a device. Basically every graphics program has a concept of color. Blender uses color in materials but also when you paint your meshes. In Photoshop you can also paint with a color. Now imagine this concept is attached to the device. When you are using Blender, you can tune your materials or change the color you are painting with; when you switch to Photoshop, you can use the same knobs to change the color you want to paint with. You want to use Maya now? Well, you know the drill. Of course this doesn’t end with the concept of color. There are other concepts shared between all of those apps, for example brush size. There is always a brush and it always has a size.
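One way to model this is to have the device speak in concepts and keep a small adapter per program that translates each concept into that program’s own settings. The sketch below is purely illustrative: `ConceptRouter`, the concept names and the lambda handlers are hypothetical, and each real handler would have to call into that program’s own scripting interface, which is where the actual integration work lives.

```python
from typing import Callable, Dict, Optional

# A handler receives the new value for one shared concept (a color, a size, ...).
Handler = Callable[[object], None]


class ConceptRouter:
    """Route device input by concept to whichever program is in focus.

    Program names, concept names and handlers are hypothetical; a real handler
    would call into that program's own scripting interface.
    """

    def __init__(self) -> None:
        self._handlers: Dict[str, Dict[str, Handler]] = {}
        self.active_app: Optional[str] = None

    def register(self, app: str, concept: str, handler: Handler) -> None:
        self._handlers.setdefault(app, {})[concept] = handler

    def dispatch(self, concept: str, value: object) -> bool:
        """Send a value to the focused program; return False if it lacks the concept."""
        handler = self._handlers.get(self.active_app, {}).get(concept)
        if handler is None:
            return False
        handler(value)
        return True


# Stand-in for each program's internal settings.
state = {}
router = ConceptRouter()
router.register("Blender", "color", lambda rgb: state.update(blender_color=rgb))
router.register("Photoshop", "color", lambda rgb: state.update(photoshop_color=rgb))
router.register("Photoshop", "brush_size", lambda px: state.update(photoshop_brush=px))

router.active_app = "Blender"
router.dispatch("color", (1.0, 0.5, 0.0))    # the same three knobs...
router.active_app = "Photoshop"
router.dispatch("color", (0.0, 0.0, 1.0))    # ...now drive Photoshop's paint color
```

The device itself never needs to know which program is running; only the per-program handlers change when a program updates.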

For me that would be a game changer. Is it possible? To a point. As you can probably imagine, building a device that can talk to all of those programs is hard. It would require constant maintenance because the programs change. In computer graphics a lot of those programs have a built-in Python interpreter. That makes projects like the ones I showed you possible, but they are hacks in a way. Blender can run a UART communication module pretty well, but that’s not how it should be done in a commercial product.

There are companies making dedicated hardware for the computer graphics industry. At this point graphics tablets are basically a must-have. One of the biggest companies in this field, Wacom, is experimenting with dedicated controllers like this one:

I just don’t think they are heading in the right direction.

Then there is a project called PALETTE, which I find interesting. Since it supports the MIDI protocol, its output can easily be handled by a small piece of software on the PC.

Another one is BrushKnob. One knob and one button. I like the simplicity but the usability is limited.

People are searching for a better way to create. We are not even close to finding THE solution. One day we will probably interface our minds with computers, and then all of those projects will seem ridiculously primitive. For now, let’s add some more KNOBS!